As Large Language Models (LLMs) continue to advance rapidly, ensuring their outputs are accurate and reliable becomes increasingly crucial. Providing effective feedback and scalable oversight on these powerful models is a significant challenge. One promising solution is leveraging LLMs themselves as “critique models” to automate this supervision process.

However, current LLM critics often produce superficial critiques that look only at the surface of each reasoning step. This leads to inaccurate judgments and deprives the LLM generator of the detailed feedback it needs to understand and correct its mistakes.

This is where DeepCritic comes in.

What is DeepCritic?

DeepCritic is a framework for improving the critique ability of Large Language Models, especially in complex domains such as logic and mathematics. Its main goal is to enable LLM critics to deliberately analyze every step of a solution. To ensure accuracy and depth, the critic does not just review each step once: it also verifies the step from multiple perspectives and even meta-critiques its own initial critique.

By encouraging this methodical approach, DeepCritic produces critics that are better at spotting mistakes and that offer more detailed, insightful feedback to help the LLM generator improve.

How does it work?

First, a powerful LLM is used to create examples of good, detailed critiques. Imagine this LLM looking at a math problem and its step-by-step solution. For each step, it writes an initial critique. But it doesn’t stop there! It then takes that first critique and critiques it in turn: it might check the step in a different way or point out something the first critique missed. These two critiques (the first one and the critique of the first one) are then merged into one thorough, “long-form” critique for that step. This is repeated for thousands of problems and solutions to build a dedicated dataset, which is then used to fine-tune the target LLM. This supervised fine-tuning teaches the target LLM to produce such careful, deliberate critiques on its own.
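
The data-generation loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the `LLM` callable, the prompt wording, and the function names `critique_step` and `build_sft_example` are all assumptions made for this example.

```python
from typing import Callable, Dict, List

# Hypothetical interface: any function that maps a prompt string to a completion.
LLM = Callable[[str], str]


def critique_step(problem: str, steps: List[str], i: int, llm: LLM) -> str:
    """Build one long-form critique for step i (0-based) using three LLM calls."""
    context = "\n".join(f"Step {k + 1}: {s}" for k, s in enumerate(steps[: i + 1]))
    header = f"Problem:\n{problem}\n\nSolution so far:\n{context}\n\n"

    # 1) Initial critique of the step.
    first = llm(header + f"Critique Step {i + 1}. Is it correct? Explain why.")

    # 2) Critique of the critique: re-check the step another way, or point out
    #    what the first critique missed.
    second = llm(
        header
        + f"Initial critique of Step {i + 1}:\n{first}\n\n"
        + "Verify the step from a different perspective and critique this critique."
    )

    # 3) Merge both into a single long-form critique for this step.
    return llm(
        f"Merge the two critiques of Step {i + 1} into one coherent critique.\n\n"
        f"Initial critique:\n{first}\n\nSecond critique:\n{second}"
    )


def build_sft_example(problem: str, steps: List[str], llm: LLM) -> Dict[str, object]:
    """Collect one long-form critique per step as a fine-tuning example."""
    critiques = [critique_step(problem, steps, i, llm) for i in range(len(steps))]
    return {"problem": problem, "steps": steps, "critiques": critiques}
```

Running `build_sft_example` over thousands of problem and solution pairs would yield the kind of dataset described above; in practice the generated critiques would also need filtering for quality before training.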

After the LLM learns how to critique, Reinforcement Learning (RL) is used to make it even more accurate. At this stage, the model is rewarded when it correctly determines whether a solution step is correct or incorrect. The training data can be either human-labeled or automatically labeled. In automatic labeling, another LLM samples many completions of the solution from a given step to check whether they arrive at the correct answer. By rewarding accurate judgments, this RL step pushes the critic model to refine its deliberate checking procedure and make fewer incorrect judgments.
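
A rough sketch of the automatic labeling and the reward signal follows. It assumes a hypothetical `rollout` function that completes a solution from a given prefix of steps and returns its final answer. The decision rule (a step is treated as wrong when no sampled completion reaches the reference answer) and the 0/1 reward are simplifying assumptions for illustration, not a description of the exact procedure used to train DeepCritic.

```python
from typing import Callable, List

# Hypothetical: completes the solution from `prefix` and returns the final answer.
Rollout = Callable[[str, List[str]], str]


def step_looks_correct(
    problem: str,
    prefix: List[str],
    gold_answer: str,
    rollout: Rollout,
    n_samples: int = 8,
    min_success_rate: float = 0.0,
) -> bool:
    """Monte Carlo estimate: can completions from this prefix still reach the answer?"""
    hits = sum(rollout(problem, prefix) == gold_answer for _ in range(n_samples))
    return hits / n_samples > min_success_rate


def first_error_index(
    problem: str,
    steps: List[str],
    gold_answer: str,
    rollout: Rollout,
    n_samples: int = 8,
) -> int:
    """Return the 0-based index of the first step judged wrong, or -1 if none."""
    for i in range(len(steps)):
        if not step_looks_correct(problem, steps[: i + 1], gold_answer, rollout, n_samples):
            return i
    return -1


def reward(predicted_index: int, labeled_index: int) -> float:
    """Binary reward: 1.0 when the critic's judgment matches the label."""
    return 1.0 if predicted_index == labeled_index else 0.0
```

The label produced by `first_error_index` plays the same role as a human annotation: during RL, the critic's own judgment is compared against it through `reward`.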


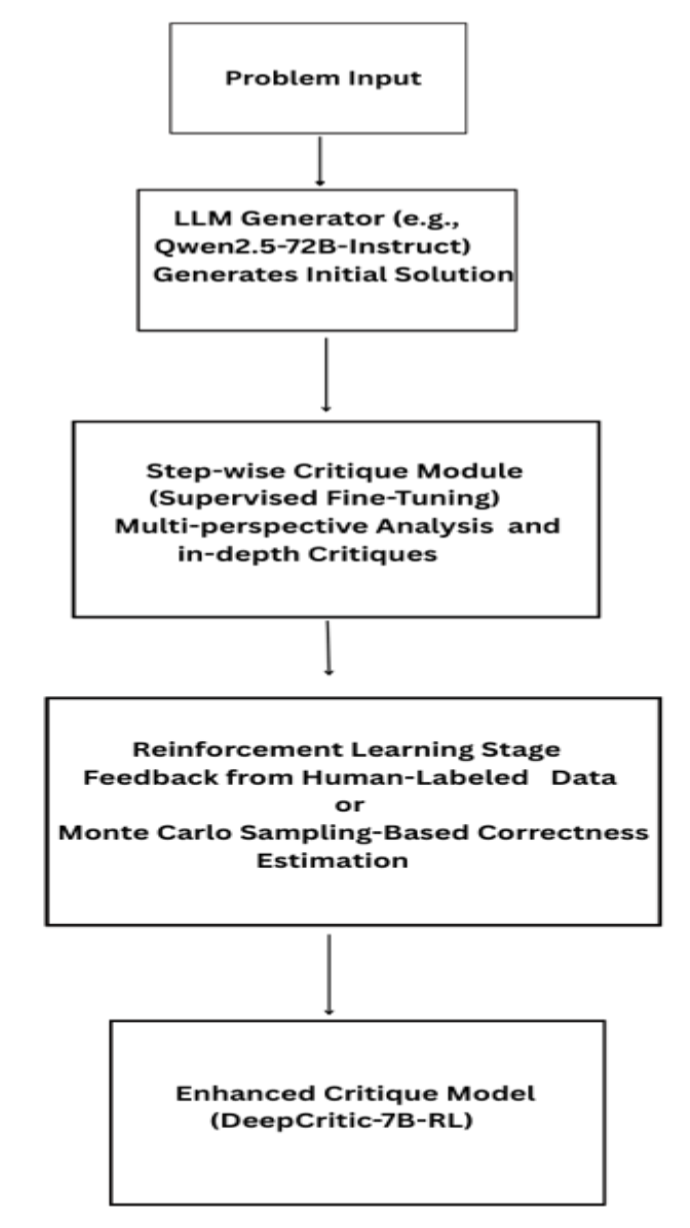

Block Diagram

Problem Input: The system receives a problem requiring step-by-step reasoning.

LLM Generator: An initial solution is generated using a large language model, such as Qwen2.5-72B-Instruct.

Stepwise Critique Module: This module thoroughly examines every stage of the produced solution, providing critiques from multiple angles and pointing out any possible mistakes. It is trained on a dataset of 4.5K long-form critiques using supervised fine-tuning.

Reinforcement Learning Stage: The critique model is further improved through reinforcement learning, using either human-labeled datasets such as PRM800K or automatically annotated data obtained from Monte Carlo sampling-based correctness estimation.

Enhanced Critique Model (DeepCritic-7B-RL): The result is a more robust critique model capable of providing detailed and accurate feedback on mathematical reasoning tasks.

Advantages of Using DeepCritic

- Improved Judgment Accuracy: By performing deliberate, in-depth, and multi-perspective critiques, DeepCritic models significantly outperform existing LLM critics and process reward models on various error identification benchmarks.

- More Informative Feedback: The long-form, detailed critiques provide much richer feedback compared to shallow critiques, offering better guidance for LLM generators to understand and correct their errors.

- Scalable Oversight: By using automatically labeled data for RL, the framework opens the door to more automated, more scalable supervision of LLMs that does not rely entirely on expensive human annotation.

- Enhanced LLM Performance: DeepCritic can improve LLM performance, either by acting as a more accurate verifier in techniques like majority voting or by providing effective feedback for critique-based refinement.
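
To make the verifier use case in the last point concrete, here is a minimal sketch of majority voting filtered by a critic. The `Critic` callable and the fallback rule are assumptions for illustration; a real critic would return a full step-wise critique from which the correct/incorrect verdict is extracted.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical: returns True when the critic judges the whole solution correct.
Critic = Callable[[str, str], bool]


def verified_majority_vote(
    problem: str,
    candidates: List[Tuple[str, str]],  # (solution_text, final_answer) pairs
    critic: Critic,
) -> str:
    """Vote only among answers whose solutions the critic accepts."""
    kept = [answer for solution, answer in candidates if critic(problem, solution)]
    # Assumed fallback: if the critic rejects everything, use a plain majority vote.
    pool = kept or [answer for _, answer in candidates]
    return Counter(pool).most_common(1)[0][0]
```

With sampled solutions where two flawed ones agree on a wrong answer and one sound one gives the right answer, a plain majority vote picks the wrong answer, while the filtered vote returns the right one, provided the critic rejects the flawed solutions.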


Disadvantages of Using DeepCritic

- Data Dependency: The framework relies on a substantial dataset of carefully curated deliberate critiques for the initial supervised fine-tuning stage.

- Computational Cost: It takes a lot of processing power to train good LLMs and produce large amounts of critique data.

Conclusion

DeepCritic represents a significant step forward in developing more capable and reliable LLM critics. By introducing deliberate critique, which combines multi-perspective verification with meta-critiquing, the framework allows LLMs to identify errors more accurately and provide more insightful feedback. This work shows how supervised fine-tuning on carefully constructed data can be combined with reinforcement learning into an effective pipeline for improving critique ability. DeepCritic’s strong performance and potential for scalable oversight highlight its importance in the ongoing effort to build more trustworthy and self-improving AI systems.