INTRODUCTION

In a world where accurate predictions drive business decisions, time series forecasting plays a pivotal role. From predicting stock prices to managing supply chains, the need for precise forecasting models keeps growing. Enter the attention-based encoder-decoder architecture, an approach that is changing how we handle sequential data.

This blog unpacks the inner workings of the encoder-decoder model, why it is effective, and where it can be applied across industries.

Understanding The Encoder-Decoder Model

The attention-based encoder-decoder model stems from advances in neural networks, particularly in natural language processing, and its application to time series forecasting is gaining traction. Here’s an overview:

Encoder: Processes historical time series data, compressing it into a compact representation (context vector).

Attention Mechanism: Identifies and weights the most critical points in the historical data, ensuring the model focuses on relevant patterns.

Decoder: Leverages the context vector and attention weights to predict future values in the sequence.

This architecture allows the model to overcome traditional limitations, such as fixed-length context vectors, by dynamically adapting to the data’s complexities.
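One common way to write this down is sketched below. The notation is an assumption for illustration, not something taken from the code shown later: $h_i$ is the encoder hidden state for input time step $i$, $s_{t-1}$ is the decoder state before forecast step $t$, $T$ is the input length, and $\operatorname{score}$ stands for whichever scoring function the model uses (for example, a dot product or a small feed-forward network).

$$
e_{t,i} = \operatorname{score}(s_{t-1}, h_i), \qquad
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j=1}^{T} \exp(e_{t,j})}, \qquad
c_t = \sum_{i=1}^{T} \alpha_{t,i} \, h_i
$$

Because the context vector $c_t$ is recomputed for every forecast step $t$, the decoder is not restricted to a single fixed summary of the history.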

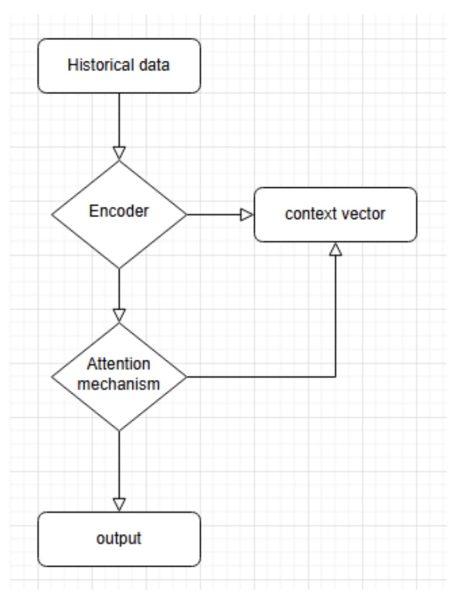

How the Encoder-Decoder Model Works: A Flowchart Overview

Step-by-Step Process:

- Input Processing: Historical time series data is segmented and fed into the encoder.

- Encoding: The encoder transforms input data into a compact context vector.

- Attention: The attention mechanism dynamically assigns importance to various time steps in the input sequence.

- Decoding: The decoder generates predictions using the context vector and attention weights.

- Output: The model produces a sequence of forecasted values.
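The input processing step above can be sketched as a sliding window over the history. This is a minimal illustration under assumed names: `make_windows`, `input_len`, and `horizon` are hypothetical and do not come from the original code, and the series values are made up.

```python
def make_windows(series, input_len, horizon):
    """Split a series into (history, future) pairs with a sliding window."""
    pairs = []
    # Last start index that still leaves room for a full history and future.
    last_start = len(series) - input_len - horizon
    for start in range(last_start + 1):
        history = series[start:start + input_len]  # fed to the encoder
        future = series[start + input_len:start + input_len + horizon]  # decoder target
        pairs.append((history, future))
    return pairs


# Toy series, purely illustrative.
series = [10, 12, 13, 15, 14, 16, 18]
pairs = make_windows(series, input_len=4, horizon=2)
for history, future in pairs:
    print(history, "->", future)
```

Each pair gives the encoder a fixed slice of the past and the decoder the values it should learn to predict. In practice the window length and forecast horizon are tuned to the data.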

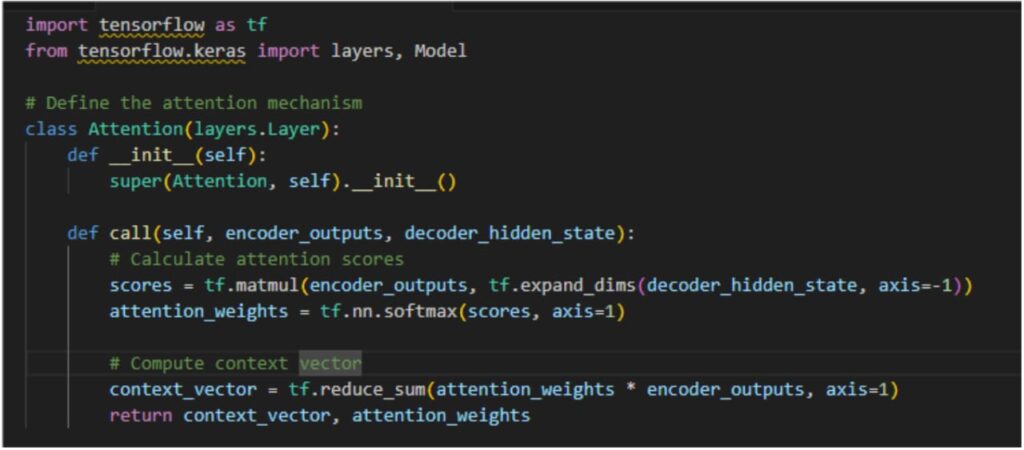

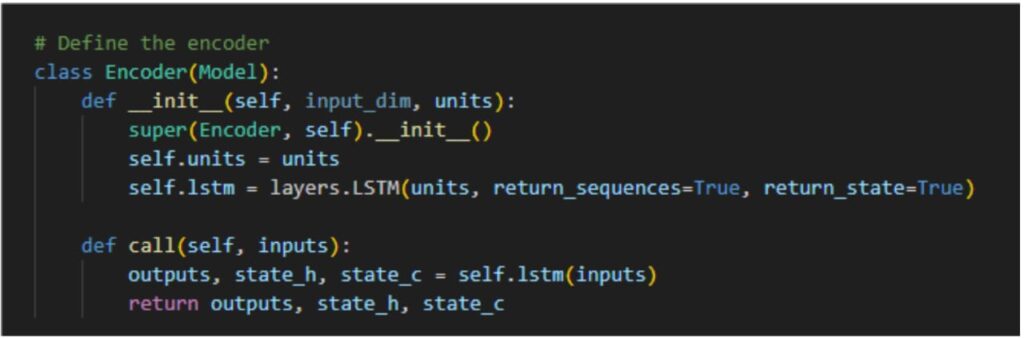

CODE SNIPPET:

- Import libraries and define attention mechanism

- Define Encoder

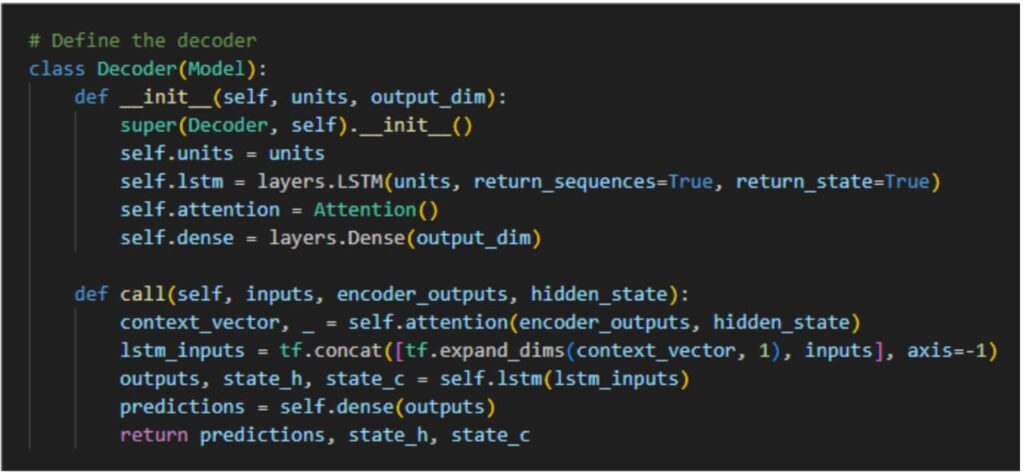

- Define Decoder

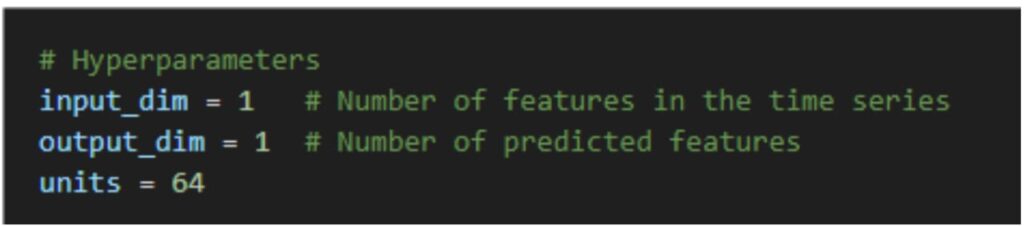

- Set Hyperparameters

- Build the Model

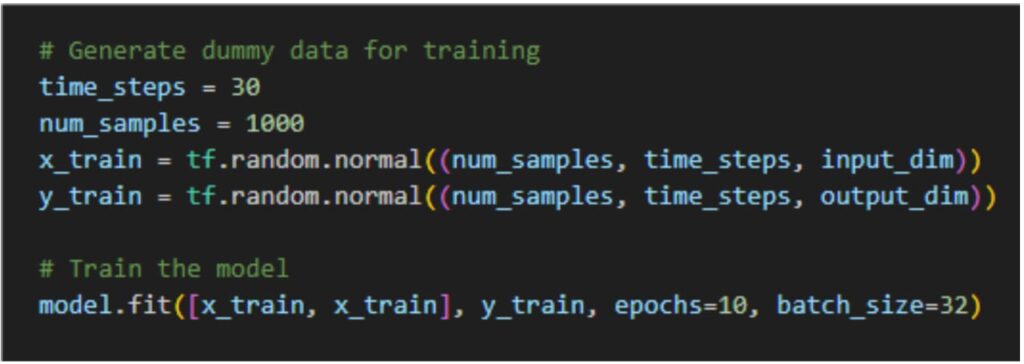

- Train the Model

HOW IT WORKS:

The key innovation lies in the attention mechanism, which allows the model to selectively focus on critical time steps. Compared with traditional models, it offers:

- Dynamic Focus: The attention weights are recomputed at every forecast step, so the model's focus shifts across the input time steps as needed.

- Better Context Understanding: It considers the entire sequence context, making predictions more robust.

- Scalability: The model can handle long sequences with less information loss than a plain recurrent encoder-decoder, which has to squeeze the entire history into a single fixed-length vector.
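To make the dynamic focus concrete, here is a minimal sketch in plain Python. It assumes dot-product scoring, which may differ from the scoring function in the original code, and the encoder and decoder states are made-up numbers rather than the output of a trained model. The names `softmax` and `attend` are hypothetical helpers.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(decoder_state, encoder_states):
    """Dot-product attention: returns (weights, context vector)."""
    # One score per input time step: similarity of decoder and encoder state.
    scores = [
        sum(d * h for d, h in zip(decoder_state, state))
        for state in encoder_states
    ]
    weights = softmax(scores)
    # Context vector: weighted average of the encoder states.
    dim = len(encoder_states[0])
    context = [
        sum(w * state[k] for w, state in zip(weights, encoder_states))
        for k in range(dim)
    ]
    return weights, context


# Hypothetical encoder outputs: one 2-d hidden state per input time step.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.5], [0.5, 2.0]]

# Two hypothetical decoder states, e.g. at two different forecast steps.
weights_a, context_a = attend([1.0, 0.0], encoder_states)
weights_b, context_b = attend([0.0, 1.0], encoder_states)

print([round(w, 3) for w in weights_a])
print([round(w, 3) for w in weights_b])
```

The two decoder states put their largest weight on different input time steps, which is the shifting focus described above. Printing the weights is also the simplest way to inspect what the model attended to for a given forecast.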

BENEFITS:

- Accuracy: Improved forecasting precision by focusing on relevant data points.

- Flexibility: Handles variable-length input sequences seamlessly.

- Interpretable Outputs: The attention weights provide insights into the model’s focus areas.

- Wide Applicability: Works across various domains requiring time series forecasting.

USE CASES:

- Finance: Stock price forecasting and risk modeling.

- Energy: Demand forecasting for efficient grid management.

- Retail: Predicting sales trends and optimizing inventory.

- Healthcare: Patient monitoring and predicting medical events.

- Transportation: Predicting traffic patterns and optimizing logistics.

CONCLUSION:

The attention-based encoder-decoder model represents a significant step forward in time series forecasting. By incorporating the attention mechanism, it addresses challenges like context loss and static representations, enabling more accurate and interpretable predictions. As industries continue to embrace data-driven strategies, this model is well placed to become a cornerstone of forecasting solutions.

Whether you’re managing supply chains or trading stocks, attention-based models provide the accuracy and insights to stay ahead in today’s fast-paced world.