Large language models (LLMs) have demonstrated remarkable capabilities in complex reasoning tasks, particularly through Chain-of-Thought (CoT) prompting. However, extended reasoning paths (Long-CoT) often increase inference cost and latency. To address this, the paper “Ada-R1: Hybrid-CoT via Bi-Level Adaptive Reasoning Optimization” introduces a framework that adaptively selects between long and short reasoning paths, optimizing both performance and efficiency.

What is Ada-R1?

Ada-R1 is a two-stage framework designed to improve reasoning efficiency in LLMs by:

- Hybrid Reasoning Model: Combining Long-CoT and Short-CoT models to enable diverse reasoning styles.

- Bi-level Preference Training: Guiding the model to select suitable reasoning styles at the group level and preferring concise and correct reasoning within each style group at the instance level.

This approach allows the model to adapt its reasoning depth based on the complexity of the input, reducing unnecessary computational overhead.

How does Ada-R1 work?

Stage I: Hybrid Reasoning Model

In this stage, Ada-R1 merges a Long-CoT model and a Short-CoT model into a single model capable of generating both types of reasoning paths. This is achieved by linearly combining the parameters of the two models:

$$\theta_H = \alpha\,\theta_L + (1-\alpha)\,\theta_S$$

Where:

- $\theta_H$: Parameters of the hybrid model

- $\theta_L$: Parameters of the Long-CoT model

- $\theta_S$: Parameters of the Short-CoT model

- $\alpha$: Merging coefficient balancing the contribution of each model

The merged model inherits the ability to produce both long and short CoT, while Stage II teaches it which style suits a given input.
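The merging formula is applied element by element to every parameter, which only works if both models share the same architecture (identical parameter names and shapes). The sketch below illustrates this with plain Python lists standing in for weight tensors; in a real setup these would be framework tensors such as PyTorch state dicts. The function name `merge_state_dicts` and the toy parameters are hypothetical, not taken from the paper's code.

```python
from typing import Dict, List

# Hypothetical stand-in for a model state dict: parameter name -> flat list of floats.
StateDict = Dict[str, List[float]]


def merge_state_dicts(theta_long: StateDict, theta_short: StateDict, alpha: float) -> StateDict:
    """Return theta_H = alpha * theta_L + (1 - alpha) * theta_S, parameter by parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if theta_long.keys() != theta_short.keys():
        raise ValueError("both models must share the same architecture (same parameter names)")
    merged: StateDict = {}
    for name, long_values in theta_long.items():
        short_values = theta_short[name]
        if len(long_values) != len(short_values):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise linear interpolation between the two models.
        merged[name] = [alpha * l + (1.0 - alpha) * s for l, s in zip(long_values, short_values)]
    return merged


long_cot = {"layer.weight": [1.0, 2.0], "layer.bias": [0.5]}
short_cot = {"layer.weight": [3.0, 0.0], "layer.bias": [-0.5]}
print(merge_state_dicts(long_cot, short_cot, alpha=0.5))
# {'layer.weight': [2.0, 1.0], 'layer.bias': [0.0]}
```

Setting `alpha=1.0` recovers the Long-CoT model and `alpha=0.0` the Short-CoT model; values in between trade off the two behaviors.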

Stage II: Bi-Level Preference Training

This stage fine-tunes the hybrid model to make adaptive reasoning decisions:

- Group-Level Preference: The model learns to choose between the long and short reasoning styles based on the input’s complexity.

- Instance-Level Preference: Within the chosen reasoning style, the model learns to generate concise and correct reasoning paths.

This bi-level training enables the model to adapt its reasoning strategy to each input, jointly optimizing accuracy and efficiency.
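The article does not spell out how the preference data is built, so the sketch below shows one plausible way under our own assumptions. For each problem we assume several sampled solutions per style, each marked correct or incorrect with a token count. At the group level, the Long-CoT group is preferred only when its accuracy beats the Short-CoT group by a hypothetical margin (0.1 here); otherwise the cheaper Short-CoT style wins. At the instance level, the shortest correct sample in the preferred group is paired against incorrect or longer ones. The resulting (chosen, rejected) pairs could then feed a preference objective such as DPO. `Sample`, `build_preference_pairs`, and the pairing rules are illustrative simplifications, not the paper's exact procedure.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    """One sampled solution for a problem (hypothetical record type)."""
    text: str
    correct: bool
    num_tokens: int


def accuracy(group: List[Sample]) -> float:
    """Fraction of correct samples in a group (0.0 for an empty group)."""
    return sum(s.correct for s in group) / len(group) if group else 0.0


def preferred_style(long_group: List[Sample], short_group: List[Sample], margin: float = 0.1) -> str:
    """Group level: prefer Long-CoT only if it is clearly more accurate (assumed margin)."""
    if accuracy(long_group) - accuracy(short_group) > margin:
        return "long"
    return "short"


def build_preference_pairs(long_group: List[Sample], short_group: List[Sample],
                           margin: float = 0.1) -> List[Tuple[str, str]]:
    """Return (chosen, rejected) text pairs for a preference objective."""
    style = preferred_style(long_group, short_group, margin)
    winners, losers = (long_group, short_group) if style == "long" else (short_group, long_group)
    correct_winners = [s for s in winners if s.correct]
    pairs: List[Tuple[str, str]] = []

    # Group level: a correct sample of the preferred style beats a sample of the other style
    # (zip pairs them one-to-one and stops at the shorter list).
    for chosen, rejected in zip(correct_winners, losers):
        pairs.append((chosen.text, rejected.text))

    # Instance level: inside the preferred style, the shortest correct sample
    # beats samples that are incorrect or longer.
    if correct_winners:
        best = min(correct_winners, key=lambda s: s.num_tokens)
        for s in winners:
            if not s.correct or s.num_tokens > best.num_tokens:
                pairs.append((best.text, s.text))
    return pairs


# Easy problem: both styles are always correct, so the shorter style is preferred.
easy_long = [Sample("L1", True, 900), Sample("L2", True, 1200)]
easy_short = [Sample("S1", True, 150), Sample("S2", True, 300)]
print(build_preference_pairs(easy_long, easy_short))
# [('S1', 'L1'), ('S2', 'L2'), ('S1', 'S2')]
```

On a hard problem where only Long-CoT samples are correct, the same function prefers the long style and still favors the most concise correct long solution.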


Code snippet

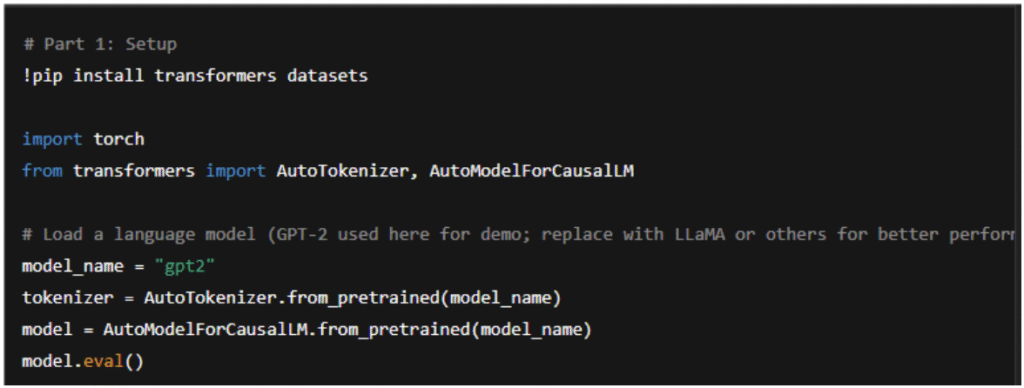

Part 1: Setup and Load Model

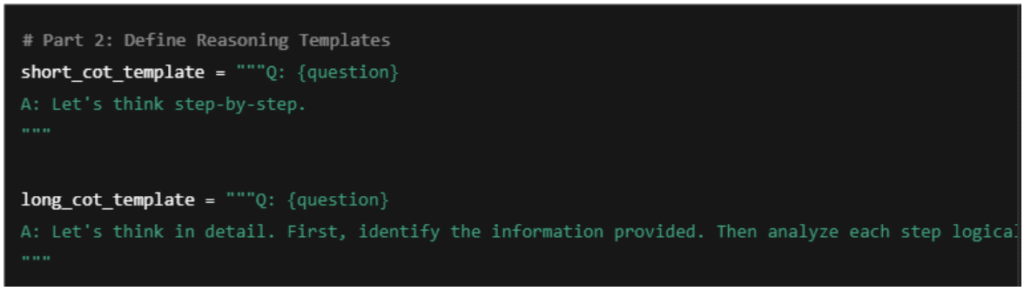

Part 2: Define Short-CoT and Long-CoT Prompt Templates

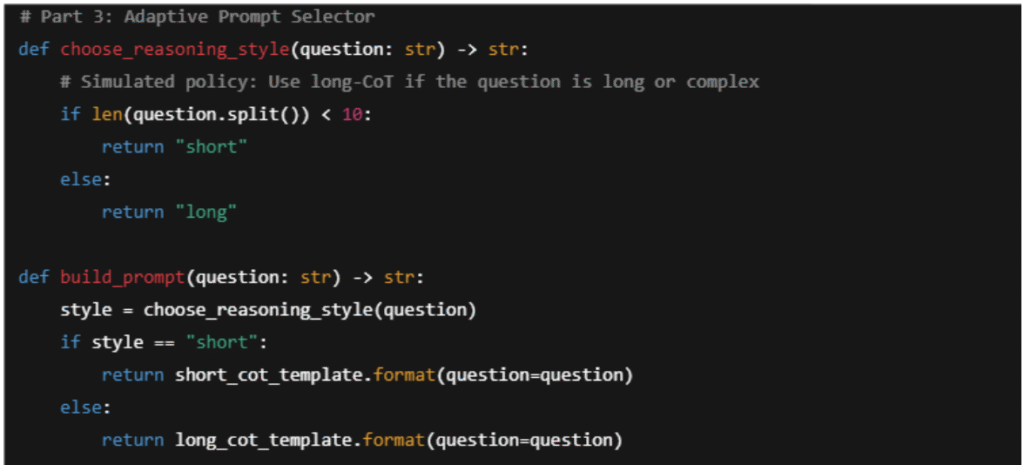

Part 3: Adaptive Reasoning Style Selection (Heuristic Version)

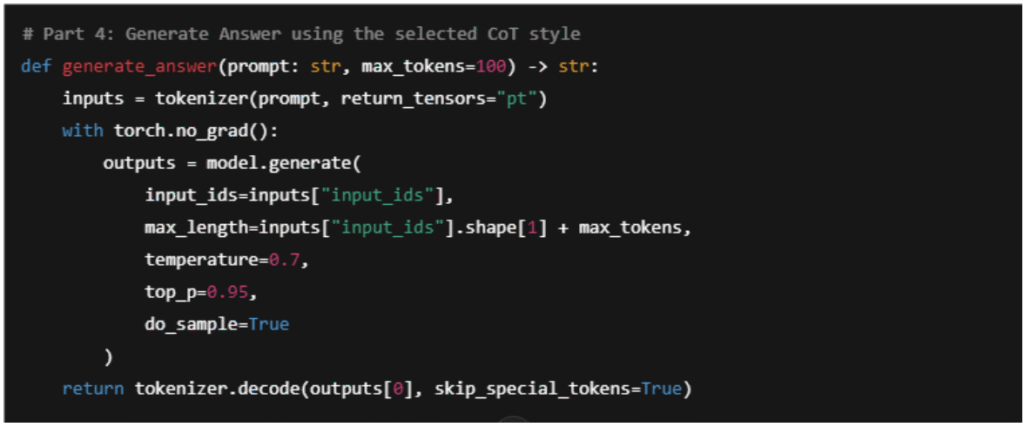

Part 4: Generate Model Response

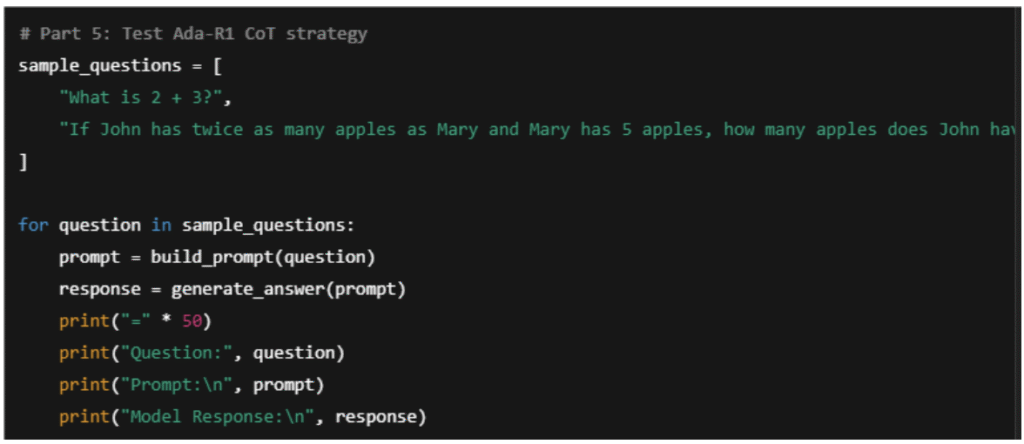

Part 5: Run Test Questions
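Because the snippets above are only available as images, here is a minimal text sketch of what Parts 2 and 3 might look like. It is not a reproduction of those screenshots: the template wording, the cue words, and the thresholds in the hypothetical `select_reasoning_style` function are all assumptions. This keyword/length heuristic only stands in for the decision that Ada-R1 learns during bi-level preference training; the trained model makes that choice itself, without an external rule.

```python
import re

# Hypothetical prompt templates for the two reasoning styles.
SHORT_COT_TEMPLATE = (
    "Answer the question with a brief explanation.\n"
    "Question: {question}\nAnswer:"
)
LONG_COT_TEMPLATE = (
    "Think through the problem step by step, checking each step, "
    "then give the final answer.\n"
    "Question: {question}\nStep-by-step reasoning:"
)

# Hypothetical cues suggesting that a question needs multi-step reasoning.
COMPLEX_CUES = ("prove", "derive", "how many", "probability", "integral", "step", "why")


def select_reasoning_style(question: str, word_threshold: int = 25) -> str:
    """Heuristic stand-in for Ada-R1's learned choice: return "long" or "short"."""
    text = question.lower()
    num_words = len(text.split())
    num_numbers = len(re.findall(r"\d+(?:\.\d+)?", text))
    has_cue = any(cue in text for cue in COMPLEX_CUES)
    if num_words > word_threshold or num_numbers >= 3 or has_cue:
        return "long"
    return "short"


def build_prompt(question: str) -> str:
    """Fill the template that matches the selected reasoning style."""
    style = select_reasoning_style(question)
    template = LONG_COT_TEMPLATE if style == "long" else SHORT_COT_TEMPLATE
    return template.format(question=question)


for q in ["What is the capital of France?",
          "How many ways can 5 people sit around a round table?"]:
    print(select_reasoning_style(q), "|", build_prompt(q).splitlines()[0])
```

In a full pipeline, the string returned by `build_prompt` would be passed to the model loaded in Part 1 for generation.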

Why Use Ada-R1?

- Efficiency: By adapting its reasoning depth, Ada-R1 avoids unnecessary computation, leading to faster inference.

- Flexibility: The hybrid model can handle a wide range of tasks by selecting appropriate reasoning strategies.

- Performance: Despite reduced reasoning lengths, Ada-R1 maintains or even improves accuracy on complex tasks.

Benefits of Ada-R1

- Reduced Inference Costs: Ada-R1 significantly lowers the average reasoning length, reducing the computational resources required.

- Maintained Accuracy: The adaptive approach ensures that performance is not compromised while improving efficiency.

- Scalability: Ada-R1’s framework can be applied to other LLMs, provided Long-CoT and Short-CoT variants with the same architecture are available for merging.


Use Cases of Ada-R1

- Resource-Constrained Environments: Ada-R1 enables efficient reasoning in settings with limited computational resources.

- Real-Time Applications: The reduced inference time makes Ada-R1 suitable for applications requiring quick responses.

- Domain-Specific Tasks: Ada-R1 can adapt reasoning strategies for specialized domains like legal or medical text analysis.

Conclusion

Ada-R1 presents an innovative approach to optimizing reasoning in LLMs by adaptively selecting reasoning depths based on input complexity. Its two-stage framework effectively balances performance and efficiency, making it a valuable contribution to the field of natural language processing.